BoltAI v1.11.0 (build 29)

This release adds support for GPT-4 Vision, text-to-speech, and fine-tuned models.

TL;DR

- New: Support for GPT-4 Vision

- New: Better UI/UX for GPT-4 Vision, including drag and drop onto the menu bar icon

- New: Text-to-speech using the OpenAI API

- New: Support for fine-tuned models

- Fixed an issue with Azure OpenAI Service

GPT-4 Vision

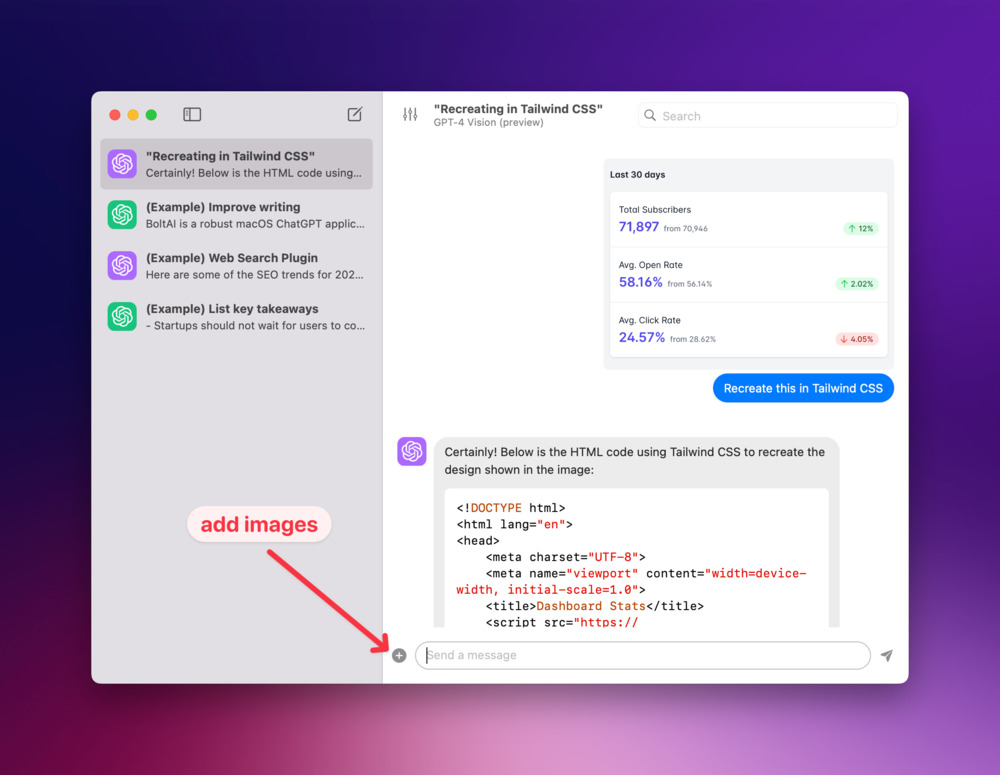

GPT-4 Vision (GPT-4V) is a multimodal model that lets you upload an image as input and hold a conversation with the model about it. Starting with v1.11, BoltAI supports GPT-4V natively (if your API key has access to it).

There are two ways to use GPT-4V with BoltAI:

- Start a new conversation, attach your images and start asking questions

- Drag and drop your images onto BoltAI's menu bar icon

You can attach images with the Plus button or by dragging and dropping them.

I can't wait to hear how you use this feature. Watch more demo videos below.

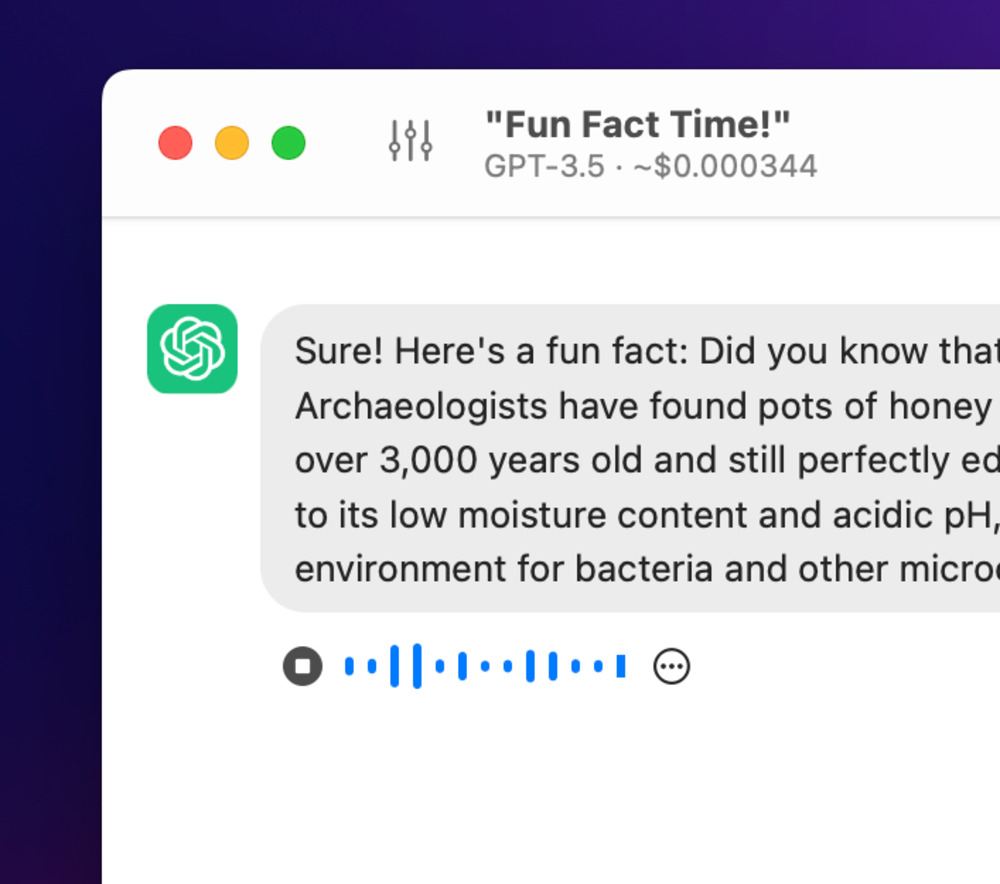
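For readers curious about what an image question looks like at the API level, here is a minimal sketch of a Chat Completions request body that pairs a text question with one inline image. It assumes the `gpt-4-vision-preview` model name and the OpenAI "content parts" message format (`text` plus `image_url` with a base64 data URL); `build_vision_request` is a hypothetical helper for illustration, not BoltAI's actual code.

```python
import base64


def build_vision_request(question: str, image_bytes: bytes, mime_type: str = "image/png",
                         model: str = "gpt-4-vision-preview", max_tokens: int = 300) -> dict:
    """Hypothetical helper: build a Chat Completions body with one text part and one image part."""
    # Images can be sent inline as a base64-encoded data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "model": model,  # assumed model name; use whichever vision model your key can access
        "messages": [
            {
                "role": "user",
                # A vision message's content is a list of parts rather than a plain string.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
        # Set max_tokens explicitly instead of relying on the model's default.
        "max_tokens": max_tokens,
    }
```

The resulting dictionary would be sent as JSON to `https://api.openai.com/v1/chat/completions` with an `Authorization: Bearer <your API key>` header. Inlining the image as a data URL avoids hosting the file anywhere, which suits a desktop app where the image comes from drag and drop.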

Text-to-speech

In this release, I've also added support for OpenAI's new text-to-speech API. You can change the voice in Settings > Models > OpenAI.

The UX isn't ideal yet, as there is a short delay before each response is spoken. In the next version, I plan to implement a hands-free experience where you can converse with ChatGPT without using the keyboard!

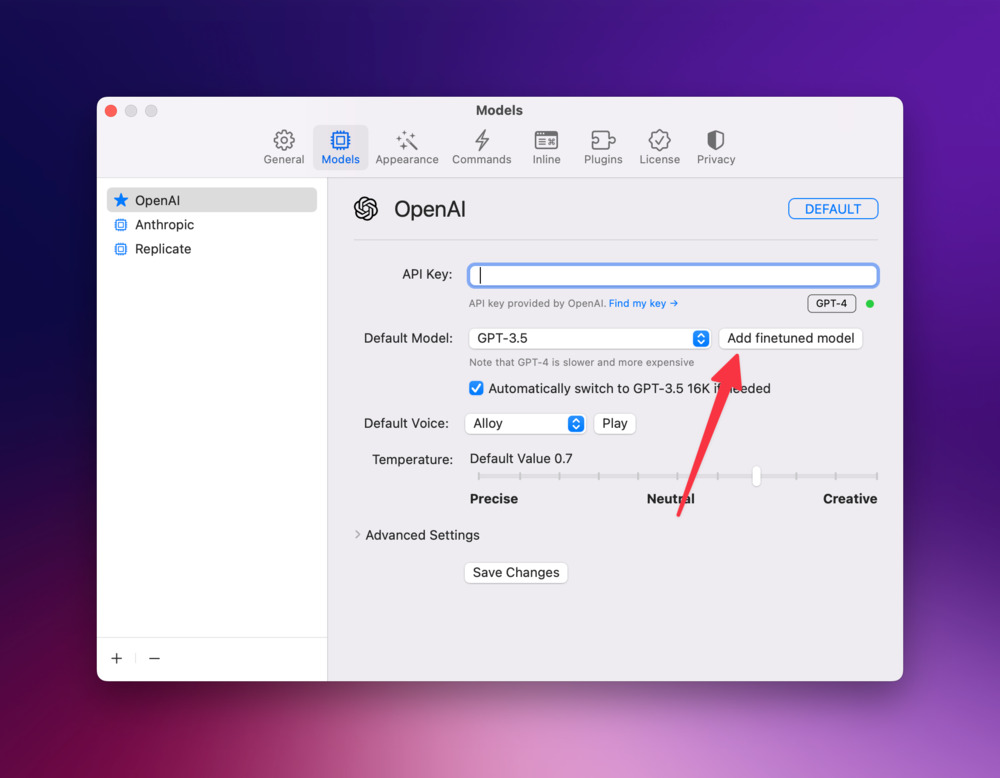
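The voice you pick in Settings ends up as a single field on the text-to-speech request. The sketch below builds the JSON body for OpenAI's `POST https://api.openai.com/v1/audio/speech` endpoint; it assumes the `tts-1` model and the six voices OpenAI listed at launch, and `build_speech_request` is a hypothetical helper, not BoltAI's implementation.

```python
# Voices listed by OpenAI's text-to-speech API at launch (assumed; check the current docs).
TTS_VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}


def build_speech_request(text: str, voice: str = "alloy", model: str = "tts-1") -> dict:
    """Hypothetical helper: build the JSON body for the /v1/audio/speech endpoint."""
    if not text:
        raise ValueError("text must not be empty")
    if voice not in TTS_VOICES:
        raise ValueError(f"unknown voice {voice!r}; expected one of {sorted(TTS_VOICES)}")
    # The endpoint replies with raw audio bytes (MP3 unless a response_format is given).
    return {"model": model, "input": text, "voice": voice}
```

Because the response is a complete audio file rather than text, the app has to wait for generation before playback can start, which is one plausible source of the delay mentioned above.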

Fine-tuned models

This has been one of the most requested features. You can now add your own fine-tuned models in Settings > Models > OpenAI.

Using a fine-tuned model is pretty straightforward, though I had to tweak the System Instruction to make it work.

If you run into any issues, please let me know.

That's all for now 👋

PS: I'm running a Black Friday promotion. Grab the deal while it's still available 🎁

If you are new here, BoltAI is a native macOS app that allows you to access ChatGPT inside any app. Download now.